Computational mechanics for engineers

For UTC, computational mechanics is not just one of the many fields in which the university invests as a structure that can usefully be integrated into the teaching of science and technology. It is a specialty launched in the 1970s by three pioneers, Jean Louis Batoz, Gouri Dhatt and Gilbert Touzot, which has become a key subject at UTC, with over 300 PhDs awarded, confirming that UTC is indeed a leader in this field.

Computational mechanics is widely used, and UTC wanted to celebrate 40 years of investment in the field by organizing a conference on 26–27 November 2015. The aim was to recall the pioneering days and to update attendees on the latest developments and on how the modelling tools developed here now affect a growing number of areas.

These tools are now commonplace in the mechanical engineering industries, but their use now extends to numerous areas where multiphysics behaviour can be modelled. “There is still plenty of room for further progress, for instance in biomechanics and in environmental sciences”, explains Jean Louis Batoz, emeritus Professor at UTC, who underscores the prospects offered by physics applied to complex, multiphysics and multiscale environments such as urban physics.

Another highlight of the November conference at UTC was the award of an honorary doctorate (honoris causa) to Professor Klaus-Jürgen Bathe, one of the most eminent pioneers of this specialty. Although born in Germany, Professor Bathe has been working at MIT (Cambridge, USA) for 40 years, where he has tackled some of the most fundamental aspects of the field and developed associated software packages for use in industry. He has authored several books, published hundreds of papers and is involved in editing some twenty international peer-reviewed journals.

From the 1940s, when nuclear chain reactions were modelled at Los Alamos and digital fluid mechanics was invented by NACA, NASA's predecessor, to today's systematic use of digital tools in industrial design, computational mechanics has gone through many stages and phases, always with the same objective: improving the models to gain in time and precision.

Time pressure, the difficulty of carrying out full-scale experiments and the advent of the first generation of digital computers meant that the end of WWII, and especially the aftermath of the Manhattan Project, marked the birth of computational modelling. The first investigations consisted of simulating the propagation of nuclear chain reactions, work which in turn led to the assembly of the first atomic bombs.

Bearing in mind that the computers of that era took several seconds to carry out a multiplication, the major change came in the 1970s, when the processing power of computers rose significantly and mechanical engineering modelling tools began to be used in industry. “At the CETIM [technical centre for mechanical engineering industries] and at UTC, the first series of research work began in 1972–1975, leading to the first software packages used by industrialists in the 1980s”, explains Mansour Afzali, Senior Science Delegate at CETIM.

Decomposing objects

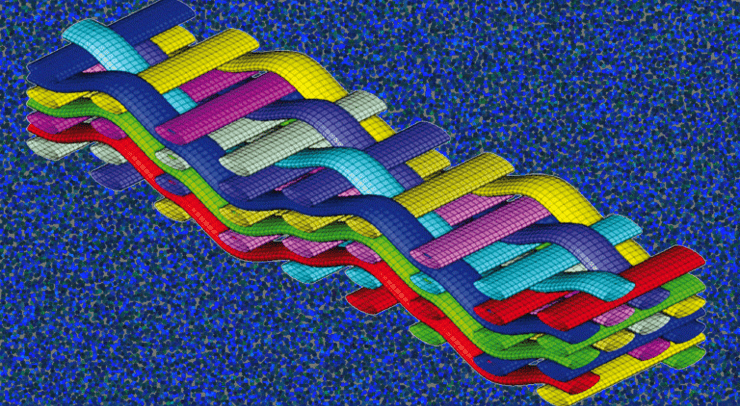

One of the most commonly used methods is the “finite element” method, in which space and objects are subdivided into mesh cells (or elements) that are as simple as possible. The system of equations describing the complete object is obtained by assembling the contributions calculated for each element. “In the early days of computational mechanics, the work consisted of building models that ‘characterize’ mechanical, physical objects and ensuring they were consistent with reality and with the laws of mechanics”, adds Mansour Afzali.
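To make the idea of assembly concrete, here is a minimal sketch for the simplest case we could choose, assuming a straight elastic bar fixed at one end and pulled axially at the other. The function names and numerical values are illustrative assumptions; industrial codes use far richer 2D and 3D elements. Each two-node element contributes a small 2×2 stiffness matrix, and adding these blocks into one global matrix is the “assembly” step described above.

```python
# Minimal 1D finite element sketch (assumption: uniform elastic bar, fixed at
# x = 0, axial force F at the free end). Each element contributes
# k_e = (E*A/h) * [[1, -1], [-1, 1]]; summing these gives the global K u = f.


def assemble_bar_stiffness(n_elements, length, E, A):
    """Return the global stiffness matrix (list of lists) for a uniform bar."""
    n_nodes = n_elements + 1
    h = length / n_elements  # element length
    k = E * A / h            # axial stiffness of one element
    K = [[0.0] * n_nodes for _ in range(n_nodes)]
    for e in range(n_elements):
        i, j = e, e + 1      # global indices of the element's two nodes
        K[i][i] += k
        K[i][j] -= k
        K[j][i] -= k
        K[j][j] += k
    return K


def solve(K, f):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(f)
    M = [row[:] + [f[r]] for r, row in enumerate(K)]  # augmented matrix
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for cc in range(c, n + 1):
                M[r][cc] -= factor * M[c][cc]
    u = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(M[r][cc] * u[cc] for cc in range(r + 1, n))
        u[r] = (M[r][n] - s) / M[r][r]
    return u


def bar_tip_displacement(n_elements, length, E, A, F):
    K = assemble_bar_stiffness(n_elements, length, E, A)
    f = [0.0] * (n_elements + 1)
    f[-1] = F  # point load at the free end
    # Boundary condition u(0) = 0: drop the first row and column.
    K_red = [row[1:] for row in K[1:]]
    u = solve(K_red, f[1:])
    return u[-1]


if __name__ == "__main__":
    # Illustrative steel bar: 2 m long, 1 cm^2 cross-section, 10 kN tip load.
    tip = bar_tip_displacement(n_elements=4, length=2.0, E=210e9, A=1e-4, F=1e4)
    print(f"FE tip displacement:  {tip:.6e} m")
    print(f"analytical F*L/(E*A): {1e4 * 2.0 / (210e9 * 1e-4):.6e} m")
```

For this particular load case, linear elements reproduce the exact nodal solution F·L/(E·A), which makes the sketch easy to check; for real parts the mesh has to be refined until the results stop changing.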

The objective is to model the tedious process of manual design. That process begins with a technical drawing, from which a series of calculations is made to ensure compliance with the laws of physics. The next step is to build a physical prototype, which undergoes a series of tests. Depending on the results, the process is repeated, sometimes from the very beginning, so that the errors detected can be corrected.

“The process can be repeated as many times as necessary, until we obtain a prototype that passes all the tests in the specification”, underlines Mansour Afzali, adding that modelling allows scientists to carry out a large number of virtual tests and so avoid long and costly iterations. Computational mechanics has halved the time needed to design a car, and has even made it possible to design the Airbus A380 solely on the basis of computer modelling.

Improving the models and the software

As new computational mechanics tools became available in the 1980s, numerous tests were carried out to characterize materials and to validate the results of the calculations. Such work made it possible to improve both the models and the software. In the 1990s, the software underwent extensive testing to improve its reliability and ‘robustness’.

Ever more young engineers are being trained with these tools as they spread through industry. Computer science is developing rapidly, and digital modelling is now an integral part of CAM (computer-aided manufacturing) software. “Progress like this, with more robust models and homogeneous modelling approaches, allows significant time savings and reduces the number of tests needed”, details Mansour Afzali. Industrialists increasingly trust computational mechanics tools, which have even become their tools of first resort.

Numerous possible solutions

The processing power of computers keeps growing, and it is now possible to carry out complex calculations to optimize products. Given a set of parameters, constraints and industrial objectives, the optimization process consists of identifying the best solutions among numerous possible ones. “Optimization is often the work of skilled engineers, because relevant decisions must be made at various design stages”, explains Mansour Afzali, for whom expert input is necessary to validate the results of the computations.

With a genuine explosion in processing power and increasingly homogeneous models, mechanical engineering research now integrates probabilistic approaches to assess the life expectancy of components, taking into account the variability of design parameters and conditions of use. Research is also exploring increasingly complex materials, such as composites. New methods are coming on line that enable scientists, for example, to take the strain rate of materials into account when modelling their response under crash conditions. Today, the tools can be used to design a humble tin can or to compute the mechanical response of the Eiffel Tower to climatic or seismic events.
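The article does not say which probabilistic methods are used, so the following is only a minimal sketch of one common approach: Monte Carlo sampling combined with Basquin's fatigue law, σ_a = σ'_f (2N_f)^b. The usage-related stress amplitude and the material's fatigue strength coefficient are both treated as random; the distributions and every numerical value (in MPa) are hypothetical, not data for any real component.

```python
import random


def basquin_life(stress_amplitude, fatigue_strength_coeff, b):
    """Cycles to failure from Basquin's law: sigma_a = sigma_f' * (2N)^b, b < 0."""
    return 0.5 * (stress_amplitude / fatigue_strength_coeff) ** (1.0 / b)


def failure_probability(target_cycles, n_samples=100_000, seed=0,
                        stress_mean=300.0, stress_sd=30.0,
                        sf_mean=900.0, sf_sd=45.0, b=-0.09):
    """Monte Carlo estimate of P(N_f < target_cycles).

    Stress amplitude (variability of use) and fatigue strength coefficient
    (material scatter) are drawn from normal distributions. All defaults are
    illustrative assumptions.
    """
    rng = random.Random(seed)  # fixed seed -> reproducible estimate
    failures = 0
    for _ in range(n_samples):
        s = rng.gauss(stress_mean, stress_sd)
        sf = rng.gauss(sf_mean, sf_sd)
        if s <= 0 or sf <= 0:
            continue  # non-physical draw, counted as a survival (essentially never occurs here)
        if basquin_life(s, sf, b) < target_cycles:
            failures += 1
    return failures / n_samples


if __name__ == "__main__":
    for target in (1e4, 5e4, 1e5, 2e5):
        print(f"P(failure before {target:.0e} cycles) ~ {failure_probability(target):.3f}")
```

Instead of a single deterministic life, the result is a failure probability for each target service life, which is the kind of information a probabilistic design approach provides.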

“All the component parts of a mechanical structure can be modelled and computed, and for this purpose industrialists now use computational mechanics”, adds Mansour Afzali. The tool has become commonplace, and young engineers often place total yet blind trust in it, assuming that its results are as reliable as those obtained from full-scale testing. Mansour Afzali insists on the role of the engineers, “whose responsibility it is to verify the hypotheses and the models used and never forget to assess the quality of the computational results”.

At Renault factories, the die-stamping of car parts is preceded by a series of digital design steps, from initial design through to final testing and certification. While some of these steps are standardized and can be handled by operatives with less and less specialist training, more complex operations call for the know-how and knowledge of specialist engineers.

“Modelling, in a sense, is make-believe: convincing oneself that the work at hand is ‘for real’ and then trying to reproduce the same effects in reality”, says Frédéric Mercier, a research engineer at Renault assigned to the die-stamping department. Stamping consists of bending (deforming and shaping) a flat steel sheet to obtain a 3D part which, in most cases, is no longer planar.

The geometric shaping involved is not self-evident either, and the part must also meet a series of service requirements, such as crash-test compliance. “A good model must, therefore, be close to reality”, explains Frédéric Mercier. The design chain for a car part integrates the part design, the calculations needed to stamp it and the modelling of its crash behaviour. The number of full-scale tests is significantly reduced, since most of them are now included in the modelling process.

Easier commonplace operations

The engineers model the car using so-called “thin shell” finite elements, which are adapted to thin structures. Such structures account for some 80% of commonplace products, and simplified techniques and tools allow them to be handled by operatives after a short specialist training course. “Use of these tools has become ‘democratic’, and personnel with higher-level degrees, such as PhDs and engineers, can devote more of their time to more complex tasks”, adds Frédéric Mercier.

Optimisation is for the experts

One such complex task is optimization, whose objective is to identify the best configurations for a given set of constraints. For example, if the target is to reduce CO2 emissions, one improvement consists of decreasing the weight of the vehicle while preserving its crash resistance and acoustic performance. Since these criteria pull in opposite directions with respect to steel sheet thickness and stiffness (a lighter car calls for thinner sheet, while crash resistance and acoustics call for thicker, stiffer sheet), the optimization process yields several possible compromise solutions rather than a single one.

Other criteria, such as cost or ease of production of the part, then come into play to arbitrate among these solutions. Optimization calculations become more complex when crash-resistance constraints are taken into account, and it then becomes necessary to find the right models and the right tools to avoid excessive computing times. “It is not at all reasonable to launch a computer run that will last for more than 50 hours!” stresses Frédéric Mercier.
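The two-stage logic described above, first keeping the compromise solutions that no other design beats on every criterion (the Pareto front) and then arbitrating among them with a secondary criterion such as cost, can be sketched as follows. The designs, their masses, intrusion values and costs are invented for illustration; in practice each figure would come from a crash or stamping simulation, not a hand-written list.

```python
from typing import NamedTuple


class Design(NamedTuple):
    name: str
    mass_kg: float       # to minimize (lighter vehicle, lower CO2)
    intrusion_mm: float  # to minimize (less cabin intrusion = better crash resistance)
    cost_eur: float      # secondary arbitration criterion (hypothetical)


def dominates(a: Design, b: Design) -> bool:
    """True if a is no worse than b on both objectives and strictly better on one."""
    no_worse = a.mass_kg <= b.mass_kg and a.intrusion_mm <= b.intrusion_mm
    better = a.mass_kg < b.mass_kg or a.intrusion_mm < b.intrusion_mm
    return no_worse and better


def pareto_front(designs: list[Design]) -> list[Design]:
    """Keep the non-dominated designs (O(n^2), fine for a handful of candidates)."""
    return [d for d in designs if not any(dominates(o, d) for o in designs)]


candidates = [
    Design("A", 10.2, 95.0, 120.0),
    Design("B", 11.0, 80.0, 110.0),
    Design("C", 11.5, 82.0, 100.0),  # heavier and worse in crash than B: dominated
    Design("D", 12.4, 65.0, 130.0),
    Design("E", 13.0, 66.0, 125.0),  # dominated by D
]

front = pareto_front(candidates)
print([d.name for d in front])  # ['A', 'B', 'D']

# Arbitration among the compromises with a secondary criterion (here: cost).
chosen = min(front, key=lambda d: d.cost_eur)
print(chosen.name)  # B
```

Note that design C is the cheapest overall but is never considered, because B is both lighter and better in crash; the cost criterion only arbitrates among genuine compromises.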

Adapting to special cases

Optimization calls for some precautions, partly because the applicable standards are multiplying and differ from one market to another; they relate mainly to safety, performance and the environment. “For crash situations, over a dozen were modelled”, says Frédéric Mercier, citing pedestrian, frontal and side impacts…

The need for expertise is not limited to optimization; it also concerns processes such as identifying numerical criteria to detect possible surface “aspect” defects, or the use of composites. Composites tend to break up under impact, which alters the material's original properties. “The software packages we use are not suitable for this kind of crash behaviour, even if it is possible to set up ways to take it into account”, underlines Frédéric Mercier.

As for potential improvements, Frédéric Mercier also has some personal ideas. For instance, he would like software designers to offer more ‘user-friendly’, more modern interfaces. “For example, they could draw some inspiration from Apple and propose haptic tools”, suggests Frédéric Mercier, who dreams of “digital modelling programmes for smartphones and iPads”. In a more serious vein, another path to improvement lies in developing models that comply as closely as possible with the laws of physics. And even though the processing power of computers has risen more than tenfold, reducing computing times remains a topical issue.

Computational mechanics, which underpins new product development as well as training tools, is now widely used in industry. Nevertheless, for such different uses, the underlying challenges and the expected results do not necessarily call for the same modelling tools, nor for the same skills among those who implement them.

From a simple ballpoint pen to the latest Airbus A380s that fly the world over, industrial design always begins with a phase in which the future product is modelled on a computer screen. This gives industrialists a means not only to conceive and shape new artefacts, thereby limiting the need for test-rig prototypes, but also to do so more rapidly and at lower cost, while meeting an increasingly demanding specification as well as possible.

The object is drawn, and its mechanical properties are modelled together with its environment and usage constraints. From that point on, “it becomes possible to test large numbers of possibilities so as to optimize the design of the object with respect to the given specification and to the usage envisaged”, explains Professor Francisco Chinesta, Ecole Centrale de Nantes, a specialist in computational mechanics.

Different expectations, depending on the objectives

For engineers, the difficulty lies in identifying the most suitable level of modelling while keeping design time within reasonable bounds. “Necessarily, we must adapt the model to the objectives”, underscores Francisco Chinesta, adding that the constraints of reliability, standards and risk are not of the same order for a ballpoint pen as for a double-decker wide-body aircraft. The difficulties encountered in implementing models, in thinking through and correctly planning the optimization, and the computing time needed for the calculations all depend on choices made at the very start.

Today, certain models are so complex that even powerful computers take months to produce a result. “Whatever the outcome”, adds Francisco Chinesta, “optimization tasks may lead to a result that does not eliminate all the risks”. As he sees it, the workload must be proportionate to the complexity of the system to be modelled, to the challenges and to the clients' expectations. For example, “an error in a weather forecast (say, less than a week ahead) is still something we find acceptable, because we all know that the system is highly unpredictable”, explains the researcher.

Challenges and expectations are very different depending on whether the subject is an aircraft or a model used to train surgeons for specific operations. In the latter case, we are not modelling reality as such; rather, we give surgeons a ‘hands-on’ feeling that is as close as possible to real scalpel work… Human perception tolerates a relatively large margin of inaccuracy here, so a very accurate model is not really necessary.

Increasingly accessible modelling tools

Although specifications impose increasingly stringent safety constraints, the trend is now to democratize the tools used in workshops and design offices. Using well-tested, certified models within standard optimization protocols requires less and less specialized operatives. “Today, some modelling is carried out by specialized technicians, with no need for support from qualified engineers”, explains Frédéric Mercier, a research engineer with the Renault Group and an expert in computational mechanics.

While, as Francisco Chinesta sees it, the most complex problems will still require the attention of highly qualified experts, many small-scale applications will appear on the market, relatively easy to use and possibly even downloadable to smartphones. The objective is to make modelling commonplace, with quick and easy modes of operation that require fewer and fewer resources. For qualified engineers, the aim should be to learn as early as possible to work on concrete problems found in industry.

Demands for better quality and lower (or zero) risk, together with new applicable standards, are forcing the stakeholders to make the requirements and challenges more explicit, and then to design a model suited to the case at hand. It then becomes the responsibility of research engineers and lecturers to maintain a close connection with the realities of the engineering profession and, consequently, to adapt their courses by basing them on industrial reality.

Perhaps this heightened awareness of industrial reality was the driving force that made UTC one of the pioneering institutions in computational mechanics and a current French leader in the field.

The sheer power of modern computers and the availability of increasingly reliable modelling tools have led to the development of optimization software packages. The corporate world is full of more or less specialized demands, all converging on the same goals: reducing production time and costs while improving product quality.

The list of intrinsic qualities expected of a new industrial product (silent, comfortable, light, clean, economical, fast, accurate, safe, aesthetic…) is much longer than it used to be. Complying with applicable standards and consumer expectations can become a nightmare for system designers, and only one word fits the dilemma: o p t i m i z e!

For an engineer designing a car, safety must be maximized, costs minimized (in production, design, operation…), and comfort maximized while fuel consumption is lowered… The problem data thus take the form of a long list of constraints and objectives to be satisfied.
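In textbook notation, such a problem is usually written as a multi-objective optimization problem. The formulation below is a generic sketch in our own notation, not taken from the article: $\mathbf{x}$ stands for the design variables (for instance sheet thicknesses), each $f_k$ for an objective such as mass or cost, and the $g_i$, $h_j$ for the constraints imposed by standards and the specification.

```latex
\begin{aligned}
\min_{\mathbf{x}} \quad & \bigl(f_1(\mathbf{x}),\, f_2(\mathbf{x}),\, \dots,\, f_m(\mathbf{x})\bigr) \\
\text{subject to} \quad & g_i(\mathbf{x}) \le 0, \quad i = 1, \dots, p, \\
& h_j(\mathbf{x}) = 0, \quad j = 1, \dots, q.
\end{aligned}
```

A quantity to be maximized, such as safety or comfort, enters as the negative of its measure. One simple way to obtain a single answer is the weighted sum $\min_{\mathbf{x}} \sum_{k} w_k f_k(\mathbf{x})$ with $w_k \ge 0$, although it cannot reach every compromise solution when the trade-off between objectives is non-convex; choosing the objectives, weights and constraints is precisely the “description of the target” that the next quote calls the main difficulty.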

“The main difficulty”, says Hossein Shakourzadeh, scientific advisor with Altair, a company specializing in the development of modelling software, “is to describe the optimization target precisely”.

Road and airborne software

One question: how are you supposed to define “comfort”? Another: what must be taken into account when estimating costs? There are many questions and no really simple answers, and the answers depend on the solutions chosen for the new product envisioned. To assist engineers, professional software publishers now offer a fairly wide range of optimization tools…

“We have to come up with solutions that meet the needs of all our customers, whether they build cars or planes”, underlines Hossein Shakourzadeh. In many instances, different problems can be solved using the same tools. Software was therefore developed to meet the greatest number of needs; by changing its parameters, it can be used in as many situations as possible.

Increasingly specialised modules

Beyond generic, parameterizable tools, which remain the preserve of research engineers, more and more specialized modules are being developed to implement optimization protocols for highly specific situations. For example, Altair's Squeak and Rattle Director aims to minimize friction noise and ‘squeaks’ from various pieces of equipment, which can change over time. A typical example is the car dashboard, but the tool is not restricted to this particular case.

“This software meets industrialists' demand to save time when designing parts, and it transfers certain skills to the software publisher”, underlines Hossein Shakourzadeh. The engineer asked to design a dashboard does not also need to be an optimization specialist, and can therefore concentrate on the job at hand: designing the dashboard.

The use of optimization packages began in the 1990s and relies on improvements to earlier models and tools. The appeal of the new tools stems from industrialists' desire to reduce design time, and from the economic race that forces them to constantly renew their offer with new products. Another important factor is the notable increase in the number of applicable standards and constraints with which new industrial products must comply. Optimization is no longer confined to mechanical engineering but now extends to almost all areas. For example, “cost optimization tools that aim both to reduce costs as far as possible and to maximize product quality are now widely used”, underscores Hossein Shakourzadeh. Altair even offers a software package designed to help companies optimize their investments in software and associated equipment. So, when shall we see optimization tools to optimize the use of optimization tools? That is the question!

Computational modelling is not limited to industrial artefacts. The computational hydraulics research team hosted by UTC applies this approach to flooding and coastal submersion, and to many other subjects connected with water, waterways and navigation…

For the analysis of floods and coastal submersion, public authorities now use computational modelling to draft policy and regulations. The computational hydraulics laboratory (LHN), created in 2003 and hosted by UTC's Roberval Laboratory, is the workplace of three research scientists from the French Ministry for Ecology who specialize in these issues.

The group has been working together since 1991 and focuses on water-related risks, notably in response to the needs expressed by the Directorate General for Risk Prevention. It also investigates river and sea transport and energy recovery from currents and waves, and looks at the consequences of impending climate change in terms of rising sea levels.

Specific tools

Philippe Sergent, now Senior Scientific Officer, has been working on these questions with his LHN partners ever since the end of the 1970s, when computational tools specific to water problems were being developed and introduced at the CEREMA Directorates for Waterways, Rivers and Maritime Transport. The REFLUX computational code, which dates back to the 1980s, integrates a first level of data organization, a computational module and a tool to display the results.

“REFLUX was used until 2000 and was then gradually replaced by EDF's TELEMAC code”, details Philippe Sergent. What is special about these studies is the sheer volume of geographic data, which requires tools capable of storing and processing it. Displaying the results is also difficult, since the areas involved cover thousands of km². “Under these conditions, the calculations are very long compared with what we traditionally see in the mechanical engineering sector”, underlines Philippe Sergent. Another specific feature of these questions is the large number of unknowns compared with conventional industrial modelling.

For example, the seasonal factor (its effect on vegetation) cannot be taken into account when an area is modelled for flood risk. The presence of hedges in built-up areas and the capacity of soils to absorb excess surface water are also difficult factors to handle.
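Flood codes such as TELEMAC-2D solve the shallow-water (Saint-Venant) equations over large 2D domains. The article does not describe the LHN's numerical methods, so the following is only a toy illustration of the underlying idea: a 1D idealized dam break solved with the classic explicit Lax-Friedrichs scheme, assuming a flat, frictionless channel closed by walls at both ends. The scheme, grid and depths are our assumptions and are far cruder than what production codes use.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def flux(h, hu):
    """Physical flux of the 1D shallow-water (Saint-Venant) equations."""
    u = hu / h
    return hu, hu * u + 0.5 * G * h * h


def lax_friedrichs_step(h, hu, dx, dt_max, cfl=0.9):
    """One explicit Lax-Friedrichs step with reflective (wall) boundaries.

    Returns the new depth h, discharge hu and the time step actually used.
    """
    n = len(h)
    # Ghost cells: same depth, opposite discharge, so no water crosses the walls.
    hg = [h[0]] + h + [h[-1]]
    qg = [-hu[0]] + hu + [-hu[-1]]
    # CFL condition: fastest wave speed |u| + sqrt(g h) limits the time step.
    speed = max(abs(q / d) + math.sqrt(G * d) for d, q in zip(hg, qg))
    dt = min(cfl * dx / speed, dt_max)
    fl = [flux(d, q) for d, q in zip(hg, qg)]
    r = 0.5 * dt / dx
    new_h, new_hu = [], []
    for i in range(1, n + 1):
        new_h.append(0.5 * (hg[i - 1] + hg[i + 1]) - r * (fl[i + 1][0] - fl[i - 1][0]))
        new_hu.append(0.5 * (qg[i - 1] + qg[i + 1]) - r * (fl[i + 1][1] - fl[i - 1][1]))
    return new_h, new_hu, dt


def dam_break(n_cells=200, length=100.0, t_end=5.0):
    """Idealized dam break: 2 m of still water on the left, 1 m on the right."""
    dx = length / n_cells
    h = [2.0 if (i + 0.5) * dx < length / 2 else 1.0 for i in range(n_cells)]
    hu = [0.0] * n_cells
    t = 0.0
    while t < t_end:
        h, hu, dt = lax_friedrichs_step(h, hu, dx, t_end - t)
        t += dt
    return h, hu, dx


if __name__ == "__main__":
    h, hu, dx = dam_break()
    print(f"water volume per unit width: {sum(h) * dx:.6f} m^2")
    print("depth every 10 m:", [round(h[i], 3) for i in range(0, len(h), 20)])
```

The wall boundaries make the scheme conserve the total water volume to round-off, a basic check any flood model must pass. Real studies add bathymetry, friction, 2D unstructured meshes and the field data whose volume and uncertainty the paragraph above describes.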

Optimizing ship movements

Another novelty is the use of 3D models of ship movements. These models help to better understand the resistance to a ship's forward motion in confined waters, with the aim of optimizing fuel consumption. The shallower the water under the hull, or the narrower the navigation channel, the higher both the water resistance and the fuel consumption.

By adjusting vessel speed, fuel savings of between 5 and 10% can be expected, and these figures can be improved further if lock management is optimized. “For hydrodynamic models, calculations can take up to one month”, details Philippe Sergent.

Pluridisciplinary approaches

Other specific developments concern cost-benefit analyses used to optimize the measures needed to deal with potential flooding and/or submersion. These studies require pluridisciplinary skills so that economic, environmental and societal aspects are properly taken into account. The major challenges today are tied to new hydropower generation schemes and to climate studies.
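The article does not detail how these cost-benefit analyses are carried out. A common textbook approach for flood protection compares the expected annual damage (EAD) with and without a measure, where EAD is the area under the damage versus annual-exceedance-probability curve, and then discounts the avoided damage over the project lifetime. The sketch below follows that approach; all flood events, damages (in M€), the discount rate and the dike costs are hypothetical.

```python
def expected_annual_damage(events):
    """EAD by trapezoidal integration of damage over annual exceedance probability.

    events: list of (annual_exceedance_probability, damage) pairs. Only the
    probability range covered by the events is integrated (a simplification).
    """
    pts = sorted(events)  # ascending probability
    return sum(0.5 * (d0 + d1) * (p1 - p0)
               for (p0, d0), (p1, d1) in zip(pts, pts[1:]))


def present_value_factor(rate, years):
    """Sum of discount factors 1 / (1 + rate)^t for t = 1..years."""
    return sum(1.0 / (1.0 + rate) ** t for t in range(1, years + 1))


def benefit_cost_ratio(events_without, events_with, investment,
                       annual_maintenance, rate=0.04, years=50):
    pv = present_value_factor(rate, years)
    avoided = expected_annual_damage(events_without) - expected_annual_damage(events_with)
    return (avoided * pv) / (investment + annual_maintenance * pv)


# Hypothetical 10-, 100- and 1000-year floods (probabilities 0.1, 0.01, 0.001).
without_dike = [(0.1, 5.0), (0.01, 40.0), (0.001, 120.0)]
with_dike = [(0.1, 0.0), (0.01, 0.0), (0.001, 100.0)]  # dike holds up to the 100-year flood

if __name__ == "__main__":
    print(f"EAD without dike: {expected_annual_damage(without_dike):.3f} M€/year")
    print(f"EAD with dike:    {expected_annual_damage(with_dike):.3f} M€/year")
    bcr = benefit_cost_ratio(without_dike, with_dike, investment=30.0, annual_maintenance=0.3)
    print(f"benefit/cost ratio: {bcr:.2f}")
```

A ratio above 1 suggests that the discounted avoided damage exceeds the discounted cost; the environmental and societal aspects mentioned above are not captured by such a purely monetary sketch, which is why these studies need pluridisciplinary skills.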

More generally, ‘blue growth’ means that all activities related to the seas and oceans (energy, mineral resources, offshore ports, etc.) offer considerable prospects for future LHN research. Another major challenge is the possible rise in sea level over the coming 50 to 100 years. With this in mind, public authorities will need new tools to help them redefine the strategy to follow if the impacts of climate change are to be minimized.